GenAI & Data Security

Generative AI (GenAI) is opening up new opportunities for growth and cost reduction at many companies. For companies in industries with privacy regulations (PCI DSS for payment card data and HIPAA for protected health information (PHI) in healthcare, to name just two), the promise of GenAI is limited by the risk of exposing sensitive personally identifiable information (PII).

GenAI also creates more room for misunderstanding in data security. Companies are only beginning to understand how data flows through these models, and each stage of AI development and deployment carries its own risks.

By understanding the pitfalls in data security, you can unlock more business value from AI without compromising sensitive information.

The AI Data Security Challenge

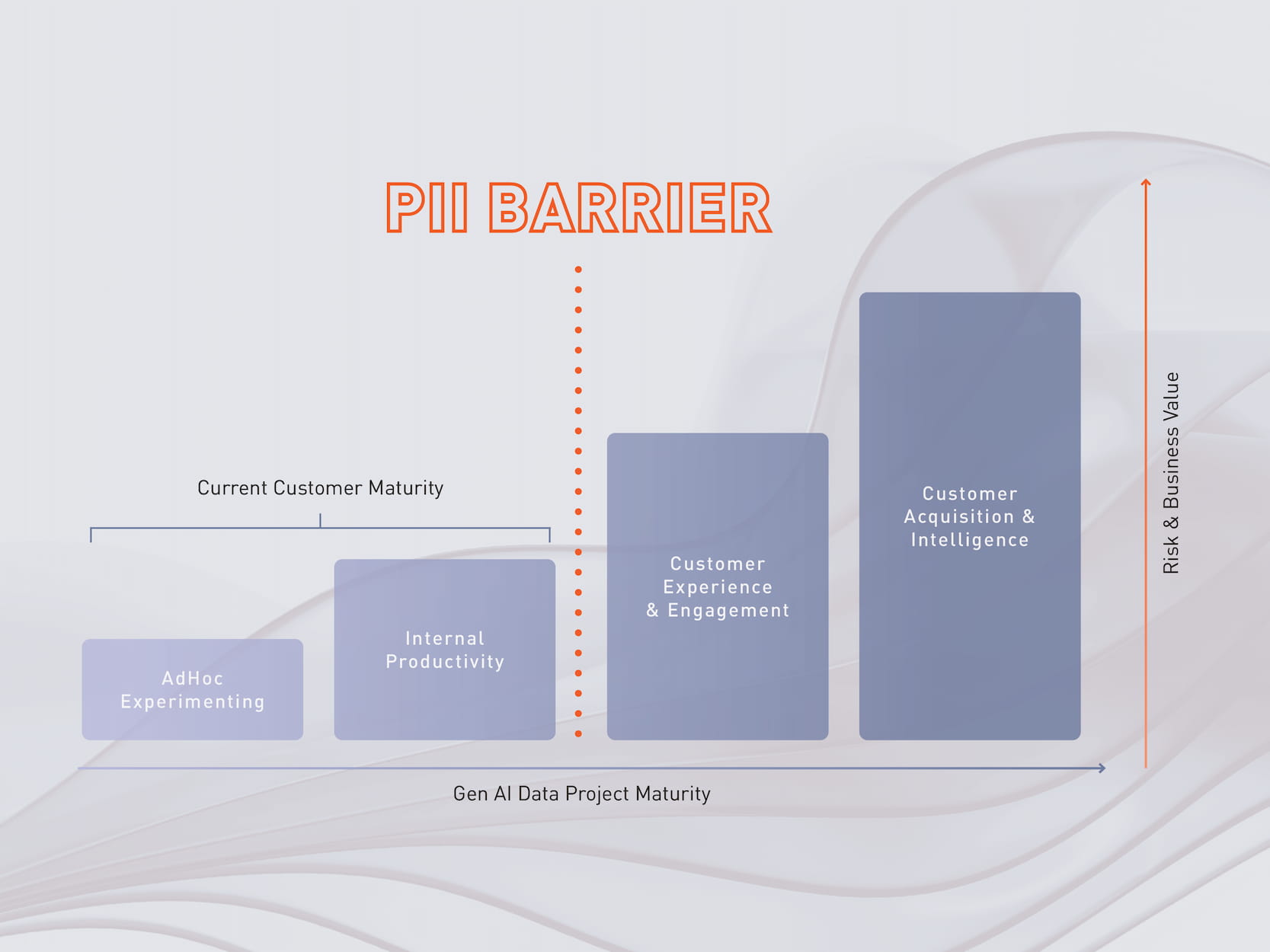

Organizations recognize the transformative opportunities of GenAI and are seeking to increase the value of their data by leveraging different language models (LLMs, SLMs), and even by creating their own. The first projects are typically experimental or focused on internal productivity. As companies look to get more from GenAI, they will pursue more complex projects for customer experience, customer acquisition, and deeper forms of customer intelligence. In those projects, they will likely encounter a “PII Barrier”: the data they want to analyze is connected with sensitive information (such as customer or patient records, financial data, or other identifiers) that must meet compliance requirements. To capture the value of the analytics while maintaining data privacy standards, they will need a way to protect the data.

Why AI Data Protection Matters

Businesses should be able to make full use of GenAI and emerging analytics without worrying about whether their data is secure and compliant with privacy regulations. There are a number of ways to protect data. Some of the most common techniques are quick to apply, but they reduce the value of the data in the process.

- You can redact all sensitive data, an approach offered natively by cloud service providers. This reduces the risk associated with data privacy, but does not allow customers to realize any value from that data.

- You can anonymize data, including by creating synthetic data. This reduces risk, but anonymized data can no longer be reversed or referenced back to the original records, which dramatically reduces its value.

- You can use tokens, or pseudonymize data. Unlike the first two options, this approach preserves data reversibility and referenceability, and it continues to work as the data set grows and changes over time.
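
The difference between redaction and tokenization can be sketched in a few lines of Python. This is a minimal illustration, not Protegrity's product or API: the `CARD_PATTERN` regex, the `redact` function, and the in-memory `TokenVault` class are all hypothetical names invented for this example, and a real deployment would need a secured, persistent vault and far more robust detection of sensitive values.

```python
import re
import secrets

# Hypothetical pattern: matches card numbers written as 4-4-4-4 digits only.
CARD_PATTERN = re.compile(r"\b\d{4}-\d{4}-\d{4}-\d{4}\b")
# Shape of the tokens issued by TokenVault below ("tok_" + 16 hex characters).
TOKEN_PATTERN = re.compile(r"tok_[0-9a-f]{16}")


def redact(text: str) -> str:
    """Replace every match with a fixed placeholder. This cannot be undone."""
    return CARD_PATTERN.sub("[REDACTED]", text)


class TokenVault:
    """Illustrative in-memory vault mapping sensitive values to random tokens."""

    def __init__(self) -> None:
        self._value_to_token: dict[str, str] = {}
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so the same value always maps to the same
        # token, which keeps records referenceable across data sets.
        if value not in self._value_to_token:
            token = "tok_" + secrets.token_hex(8)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def protect(self, text: str) -> str:
        """Swap each sensitive value in the text for its token."""
        return CARD_PATTERN.sub(lambda m: self.tokenize(m.group(0)), text)

    def reveal(self, text: str) -> str:
        """Swap known tokens back to original values; leave unknown ones as-is."""
        return TOKEN_PATTERN.sub(
            lambda m: self._token_to_value.get(m.group(0), m.group(0)), text
        )


vault = TokenVault()
message = "Card 4111-1111-1111-1111 was charged twice."

redacted = redact(message)            # value is gone for good
protected = vault.protect(message)    # value replaced by a stable token
restored = vault.reveal(protected)    # authorized users can reverse it
```

In this sketch, the protected text is what would be passed to a model or analytics pipeline. Because the same card number always yields the same token, records can still be joined and counted, and only a party with access to the vault can recover the original value.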

Protegrity’s Approach to Your GenAI Project

Protegrity delivers data security across a wide technology stack, with centralized policy management, to meet customers wherever their data privacy and security needs are.

Protegrity approaches these projects in the following way:

- Continuously Discovers Valuable Data: Protegrity’s tools find and secure crucial sensitive data for AI, ensuring nothing is missed.

- Streamlines Access and Enforcement: Simplifies data access and policy enforcement for secure and efficient GenAI operations.

- Works Across All Environments: Provides robust protection that integrates seamlessly with on-premises, cloud, and hybrid setups.

- Protects All Types of Data: Uses encryption, tokenization, and masking to secure both structured and unstructured data.

- Accelerates Data Analytics and Innovation: Speeds up data processing for faster insights and innovation without compromising security.